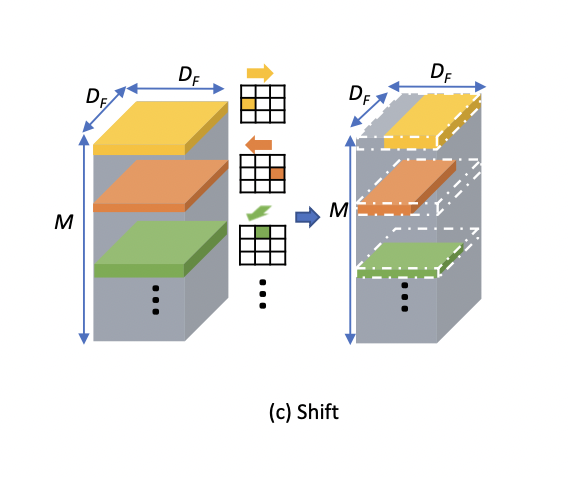

ShiftCNN shifts the channels according to the shift matrix (kernel).

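I have a question here: the code seems to use a single, fixed set of shift directions. Each channel is simply moved by its own fixed offset, so no matter how many times the ShiftConv layer is applied, the movement is always the same (data at the same channel index is always shifted by the same offset).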

Shift

This operation shifts different channels in different directions (for any given channel index, the shift direction is fixed).

Paper

To reduce the state space, we use a simple heuristic: divide the $M$ channels evenly into $D_K^2$ groups, where each group of $\lfloor M/D_K^2 \rfloor$ channels adopts one shift

(Divide the $M$ channels into $D_K^2$ groups. Each group of $\lfloor M/D_K^2 \rfloor$ channels shares one shift direction.)
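To make the grouping heuristic concrete, here is a minimal sketch in plain Python (nested lists, no framework). The function names `shift_offsets`, `assign_shifts`, `shift_plane`, and `shift` are hypothetical and not taken from any ShiftConv implementation. Two choices are assumptions of this sketch: the sign convention (the content of a plane moves by `(dy, dx)`, with vacated cells filled with zeros) and the handling of leftover channels (when `M` is not divisible by $D_K^2$ they are left unshifted).

```python
def shift_offsets(kernel_size):
    """All (dy, dx) offsets in a kernel_size x kernel_size neighbourhood."""
    r = kernel_size // 2
    return [(dy, dx) for dy in range(-r, r + 1) for dx in range(-r, r + 1)]


def assign_shifts(num_channels, kernel_size):
    """Give every channel one fixed offset, using contiguous equal-sized groups.

    Assumption of this sketch: channels left over after the even split
    keep the zero offset (0, 0), i.e. they are not shifted.
    """
    offsets = shift_offsets(kernel_size)
    group_size = num_channels // len(offsets)
    assignment = [(0, 0)] * num_channels
    for g, offset in enumerate(offsets):
        for c in range(g * group_size, (g + 1) * group_size):
            assignment[c] = offset
    return assignment


def shift_plane(plane, dy, dx):
    """Move the content of one H x W plane by (dy, dx), zero-filling the gap."""
    h, w = len(plane), len(plane[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sy, sx = y - dy, x - dx  # source pixel that lands on (y, x)
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = plane[sy][sx]
    return out


def shift(tensor, assignment):
    """Apply the per-channel offsets to a C x H x W tensor (nested lists)."""
    return [shift_plane(p, dy, dx) for p, (dy, dx) in zip(tensor, assignment)]
```

The assignment depends only on the channel index, never on the data, which matches the observation above that the same channel is always moved in the same direction however often the layer is applied.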

Both i.e. and e.g. are Latin abbreviations: i.e. stands for id est, meaning "that is"; e.g. stands for exempli gratia, meaning "for example".

However, finding the optimal permutation, i.e., how to map each channel-m to a shift group, requires searching a combinatorially large search space.
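To get a feel for how large this search space is, the following sketch counts the possible channel-to-shift assignments. The helper names are hypothetical. It assumes, for simplicity, that the number of channels is divisible by the number of shift directions ($D_K^2$); the balanced count is then the standard multinomial coefficient.

```python
from math import factorial


def count_unrestricted(num_channels, num_shifts):
    """Every channel independently picks one of num_shifts directions."""
    return num_shifts ** num_channels


def count_balanced(num_channels, num_shifts):
    """Assignments in which every direction gets the same number of channels.

    This is the multinomial coefficient M! / (g!)^n with g = M / n.
    Assumption of this sketch: num_channels is divisible by num_shifts.
    """
    if num_channels % num_shifts != 0:
        raise ValueError("num_channels must be divisible by num_shifts")
    group_size = num_channels // num_shifts
    return factorial(num_channels) // factorial(group_size) ** num_shifts
```

Even for a tiny layer with 18 channels and a 3x3 neighbourhood (9 directions), `count_balanced(18, 9)` is already in the trillions, so the even split alone still leaves far too many permutations to search exhaustively.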

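we introduce a modification (in effect, a restriction) to the shift operation that makes input and output invariant to channel order: We denote a shift operation with channel permutation $\pi$ as $K_\pi$ (note: this was unclear to me at first; $K_\pi$ is the shift operation itself, and the subscript $\pi$ is the channel permutation that decides which channel gets which shift),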

$$\tilde{G} = P_{\pi_2}\bigl(K_\pi\bigl(P_{\pi_1}(F)\bigr)\bigr)$$

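where $P_{\pi_i}$ are permutation operators and $\circ$ denotes operator composition (note: I do not fully understand this part; each $P_{\pi_i}$ is a permutation operator, while the composition $P_{\pi_2} \circ K_\pi \circ P_{\pi_1}$ as a whole is the complete shift operation)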

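Because the shift is discrete, it is hard to update by gradient descent, so the point-wise convolution $P_1(F)$ is added to carry the learnable parameters. The shift matrix itself is not updated during training.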

So long as the shift operation is sandwiched between two point-wise convolutions, different permutations of shifts are equivalent. Thus, we can choose an arbitrary permutation for the shift kernel, after fixing the number of channels for each shift direction.

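(The claim is that once the shift is sandwiched between two point-wise convolutions, the channel order no longer matters. I am skeptical: for fixed point-wise weights the order certainly does change the output. The equivalence can only hold in the sense that the learned point-wise weights can absorb the permutation.)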
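The "sandwich" argument can be checked numerically. The sketch below is a plain-Python illustration under stated assumptions, not the paper's code: all names (`shift_plane`, `pointwise`, `sandwich`, `absorb_permutation`) are hypothetical, tensors are nested C x H x W lists, and a point-wise convolution is modelled as a per-pixel matrix multiply over channels. It shows that a permuted shift gives the same output as the unpermuted shift once the rows of the first weight matrix and the columns of the second are permuted to match.

```python
def shift_plane(plane, dy, dx):
    """Move the content of one H x W plane by (dy, dx), zero-filling the gap."""
    h, w = len(plane), len(plane[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sy, sx = y - dy, x - dx
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = plane[sy][sx]
    return out


def shift(tensor, offsets):
    """Shift channel c of a C x H x W tensor by offsets[c]."""
    return [shift_plane(p, dy, dx) for p, (dy, dx) in zip(tensor, offsets)]


def pointwise(tensor, weight):
    """1x1 convolution: weight is C_out x C_in, applied at every pixel."""
    c_in, h, w = len(tensor), len(tensor[0]), len(tensor[0][0])
    return [[[sum(row[i] * tensor[i][y][x] for i in range(c_in))
              for x in range(w)] for y in range(h)] for row in weight]


def sandwich(tensor, w1, offsets, w2):
    """Point-wise conv, then shift, then point-wise conv."""
    return pointwise(shift(pointwise(tensor, w1), offsets), w2)


def absorb_permutation(w1, w2, perm):
    """Re-order weights so the unpermuted shift reproduces the permuted one.

    Middle channel c (which uses offsets[perm[c]]) is moved to slot perm[c]:
    row c of w1 becomes row perm[c], column c of w2 becomes column perm[c].
    """
    n = len(perm)
    w1_new = [None] * n
    w2_new = [[0] * n for _ in w2]
    for c, p in enumerate(perm):
        w1_new[p] = w1[c]
        for o in range(len(w2)):
            w2_new[o][p] = w2[o][c]
    return w1_new, w2_new


if __name__ == "__main__":
    f = [[[1, 2], [3, 4]]]                      # 1 input channel, 2 x 2
    w1 = [[1], [2], [3]]                        # 1 -> 3 channels
    w2 = [[1, -1, 2], [0, 1, 1]]                # 3 -> 2 channels
    offsets = [(0, 1), (1, 0), (0, 0)]
    perm = [2, 0, 1]
    permuted = [offsets[p] for p in perm]       # shift kernel under pi
    w1p, w2p = absorb_permutation(w1, w2, perm)
    print(sandwich(f, w1, permuted, w2) == sandwich(f, w1p, offsets, w2p))
```

With the weights held fixed, `sandwich(f, w1, permuted, w2)` and `sandwich(f, w1, offsets, w2)` generally differ, so the channel order does matter for a given set of weights. The equivalence only says that some other pair of point-wise weights reproduces the same function, which is why the choice of permutation is immaterial once those weights are learned.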

This convolution comprises (i.e., consists of) ..